Ph.D. Candidate: Mehmet Ali Arabacı

Program: Information Systems

Date: 18.01.2023 / 14:00

Place: A108

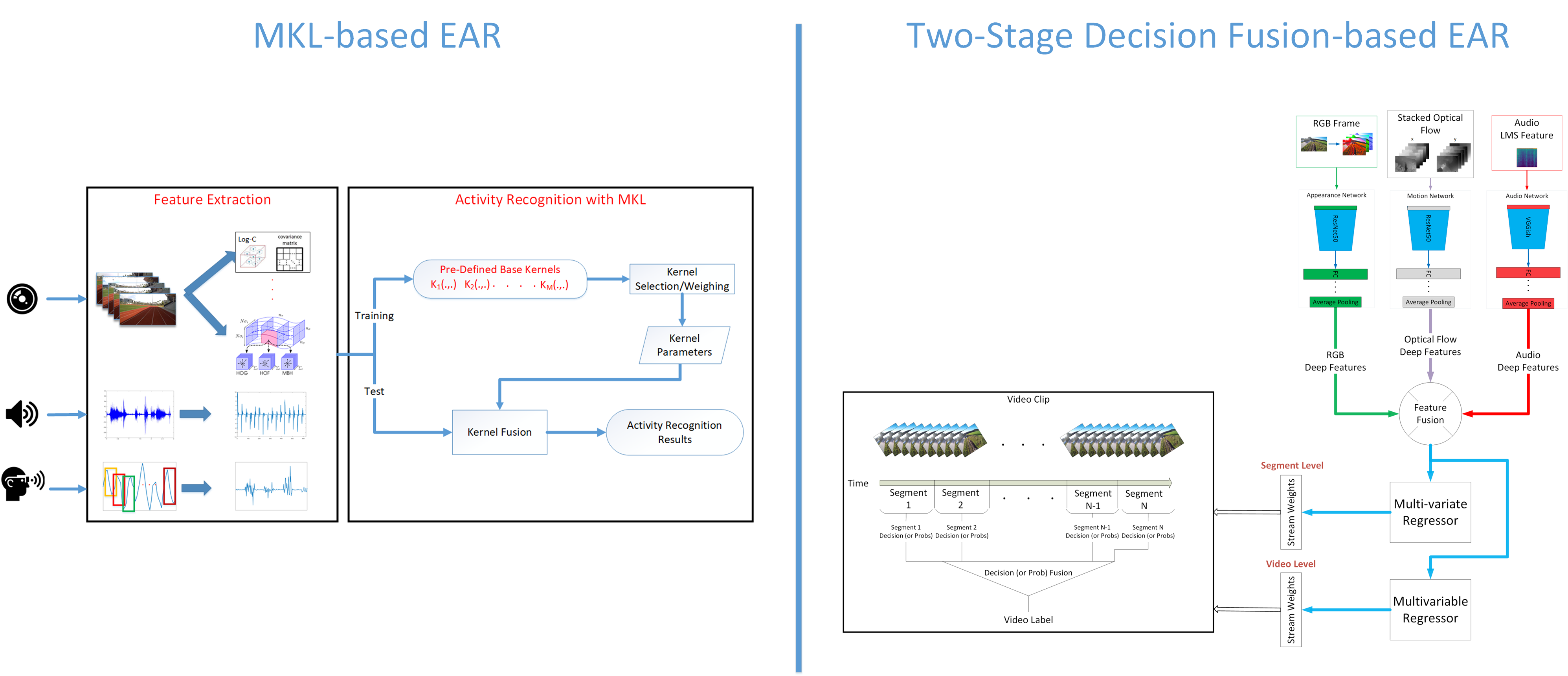

Abstract: The use of wearable devices in daily life has grown rapidly with the development of sensor technologies. The most prominent information for wearable devices comes from optical sensors, which produce videos from an egocentric perspective, known as First Person Vision (FPV). FPV differs from third-person video because of its large amounts of ego-motion and rapid scene changes. Vision-based methods designed for third-person videos, where the camera is away from events and actors, cannot be directly applied to egocentric videos. Therefore, new approaches are needed that can analyze egocentric videos and accurately fuse inputs from various sensors for specified tasks. In this thesis, we propose two novel multi-modal decision fusion frameworks for egocentric activity recognition. The first framework combines hand-crafted features using Multi-Kernel Learning. The second framework utilizes deep features through a two-stage decision fusion mechanism. The experiments revealed that combining multiple modalities, such as visual, audio, and other wearable sensors, increased activity recognition performance. In addition, numerous features extracted from different modalities were evaluated within the proposed frameworks. Lastly, a new egocentric activity dataset, named Egocentric Outdoor Activity Dataset (EOAD), was created, containing 30 different egocentric activities and 1392 video clips.