M.S. Candidate: Umut Güler

Program: Information Systems

Date: 20.01.2026 / 13:00

Place: A-212

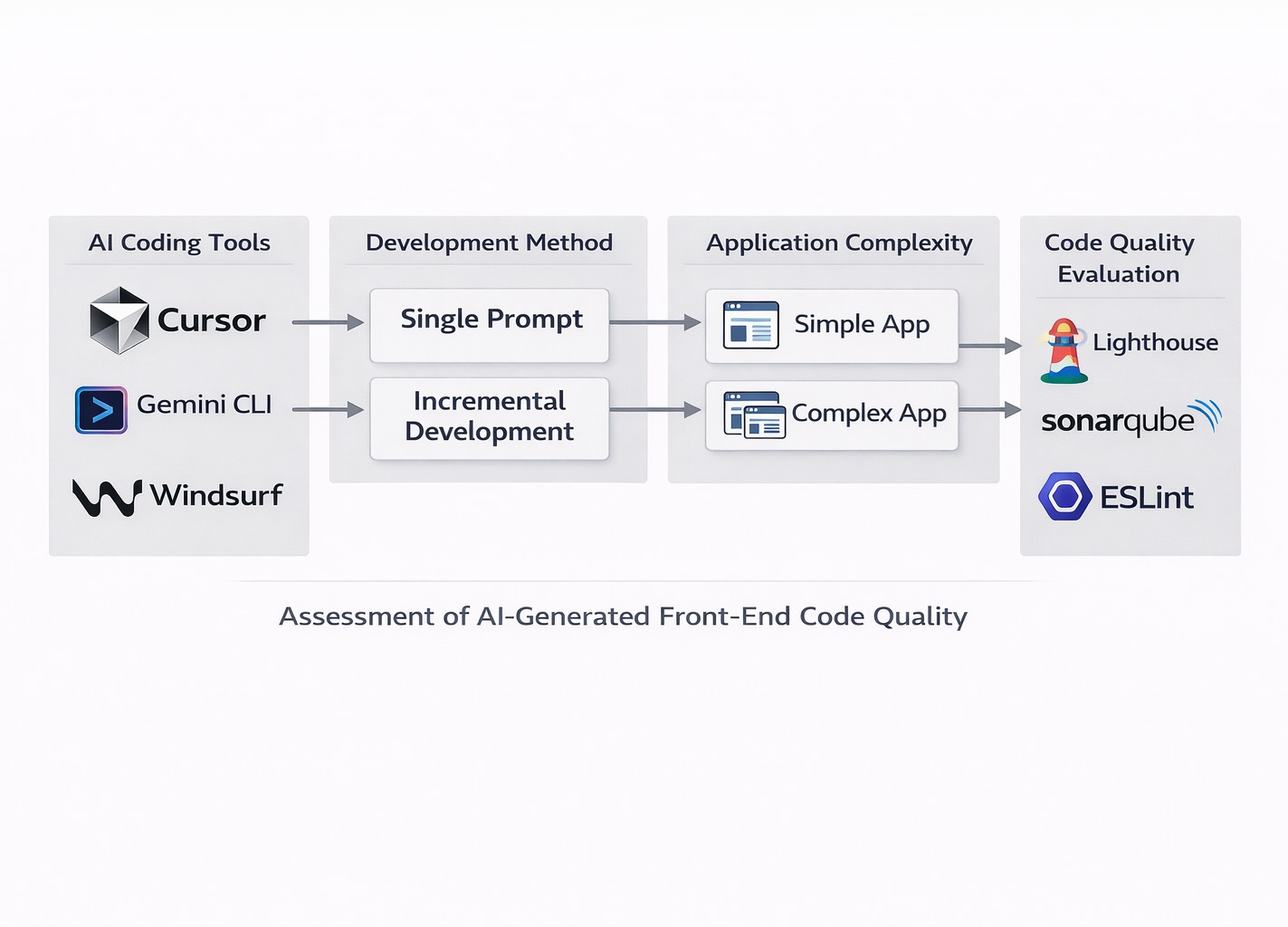

Abstract: The adoption of AI coding tools has significantly transformed software development practices, yet empirical evidence regarding the technical quality of AI-generated code and applications remains limited, particularly for modern front-end development. This thesis presents a comprehensive comparative evaluation of three commercially available AI coding tools—Cursor, Gemini CLI, and Windsurf—assessing their impact on the technical quality of front-end web applications developed using React, TypeScript, and Vite.

A controlled experiment is designed around two application scenarios: a simple To-Do application and a relatively complex Marketplace application. For each AI tool, each application is developed using two methodologies: single-prompt generation and an incremental build with iterative prompts. The technical quality of the resulting codebases and applications is evaluated with three industry-standard tools: Lighthouse for runtime performance, accessibility, and web standards; SonarQube for maintainability and code quality; and ESLint for code style compliance. Bundle sizes and interactive correction requirements are also measured as complementary metrics.

The results demonstrate that the AI tools successfully generate functionally correct applications. However, quality outcomes vary significantly with the AI tool, the development methodology, and the application complexity. In the simple application scenario, incremental development consistently benefits the AI-generated applications, regardless of the AI tool. Conversely, the complex application exhibits significant quality degradation under incremental development, including increased complexity and severe reliability drops for certain tool-methodology combinations.

The findings reveal that each tool demonstrates a distinct performance profile across scenarios and metrics; no single tool dominates across all quality dimensions. This research provides evidence-based guidance for selecting tools and development methodologies according to application complexity.