M.S. Candidate: Ata Seren

Program: Cybersecurity

Date: 15.01.2026 / 10:00

Place: Cisco Lab

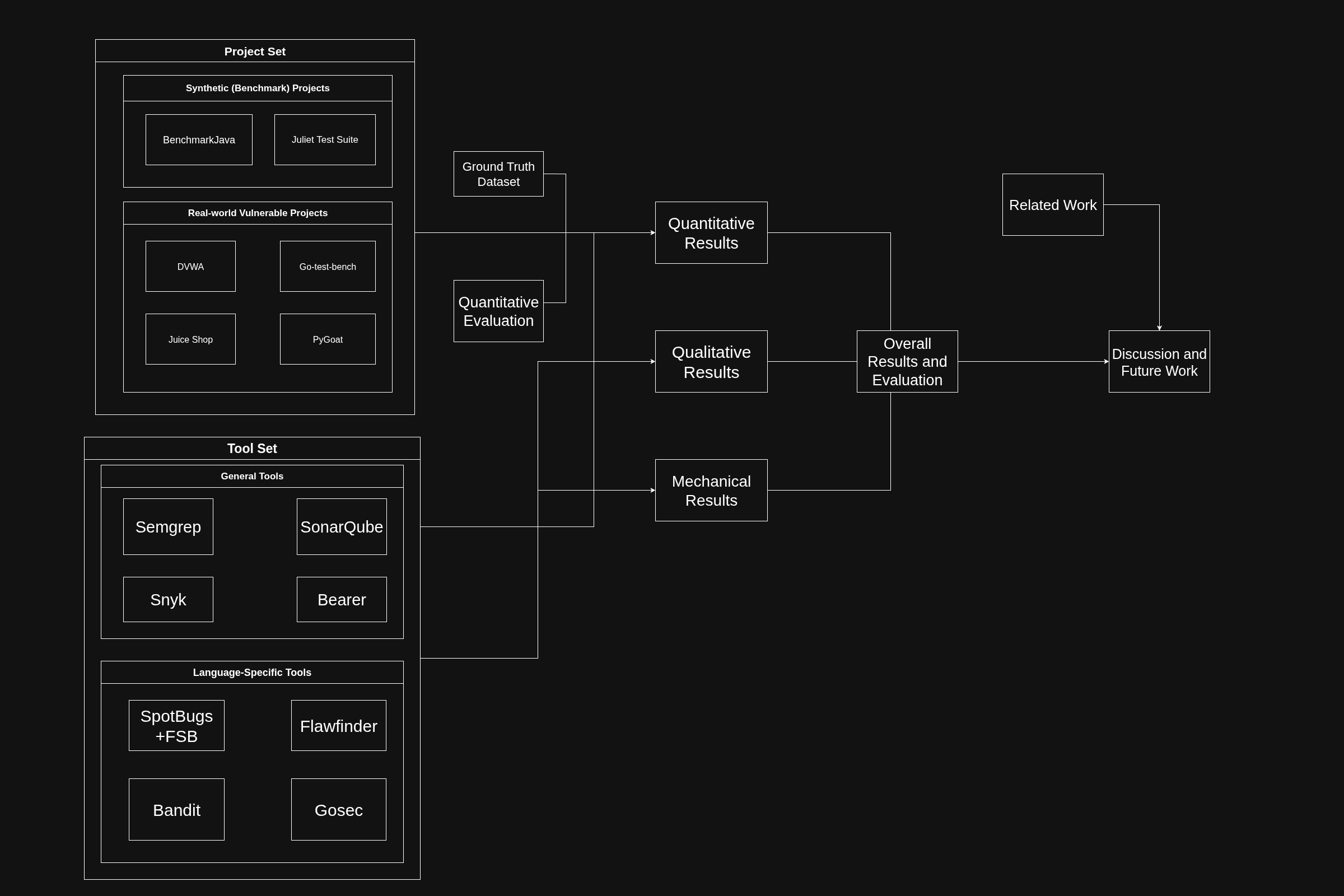

Abstract: Static Application Security Testing (SAST) tools play a critical role in identifying vulnerabilities during software development, which allows security issues to be addressed early and mitigates security risks in production. However, their real-world effectiveness remains difficult to assess because evaluations rely heavily on synthetic benchmarks and aggregated metrics. This thesis examines the internal mechanisms underlying SAST tools, including how they parse source code, analyze syntactic and semantic structures, and identify potential security weaknesses. A comparative analysis is conducted on a set of open-source SAST tools using a strict issue-level evaluation approach that measures performance based on individual actionable findings. The evaluation considers detection accuracy, false-positive and false-negative rates, performance efficiency, and programming language coverage across both benchmark datasets and intentionally vulnerable real-world applications. In addition to quantitative measurements, qualitative, mechanism-oriented assessments, such as the analysis techniques employed and additional tool features, are included to contextualize the experimental results. The findings show that no evaluated SAST tool achieves consistently strong detection performance across all languages and datasets under realistic conditions. Tools optimized for syntactic pattern matching perform well in benchmark-oriented scenarios but exhibit limitations in complex, framework-driven applications, while tools employing deeper, language-native semantic analysis provide improved precision within narrower ecosystems. Overall, the study highlights structural trade-offs between accuracy, usability, and analytical depth in current SAST designs, offering practical insights for tool selection and identifying areas for future improvement.