M.S. Candidate: Olca Orakcı

Program: Multimedia Informatics

Date: 14.01.2026 / 13:00

Place: A-212

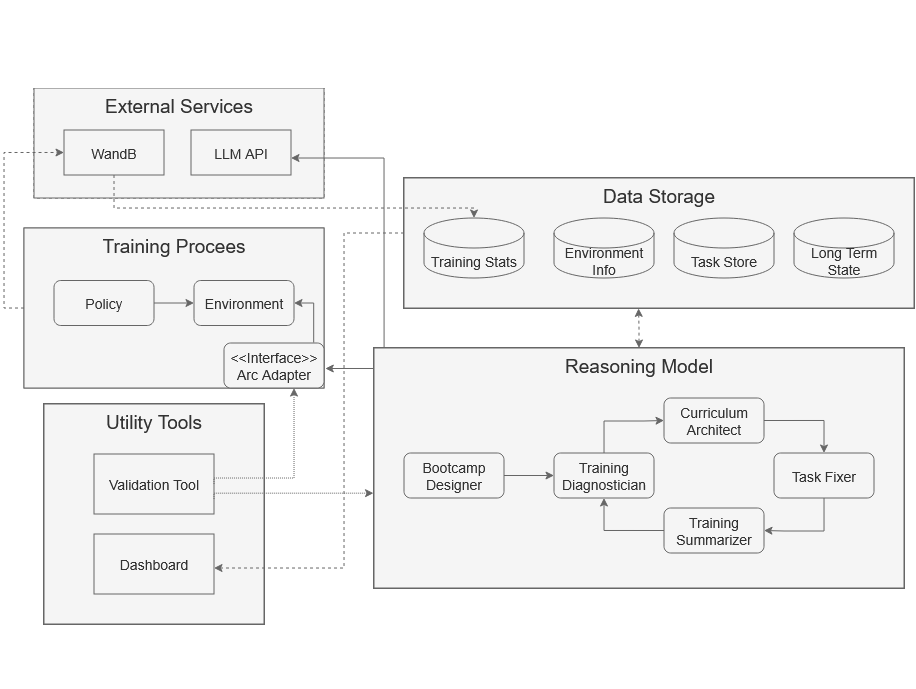

Abstract: Reinforcement Learning (RL) research has long pursued generalization, and benchmark environments have gradually evolved over the years to support this demanding goal. As environments became more complex and generalization-oriented, new research fields emerged, such as Open-Ended Learning (OEL). OEL studies learning settings that contain a large set of skills, only some of which are required in any given situation the environment presents. Such complex environments require policies that learn generalizable skills rather than memorizing specific environments. Methods such as Automated Curriculum Learning (ACL) have made this challenging task more achievable. This thesis introduces Adaptive Reasoning Curriculum (ARC), a standardized framework for using Large Language Models (LLMs) as an ACL method in a novel, high-performing, sample-efficient, and reproducible manner, and demonstrates its effectiveness in Neural MMO 2, a state-of-the-art massively multi-agent OEL environment. Experimental results show that ARC outperforms both an expert curriculum and several ACL methods in average return and sample efficiency. Accompanied by auxiliary helper tools, a dashboard, and a validation tool, the ARC framework aims to make OEL research with LLMs accessible to all researchers and enthusiasts through open-source, standardized, and shareable adapters, orchestrators, and experiment files.