M.S. Candidate: Atıl İleriaalkan

Program: Multimedia Informatics

Date: 02.09.2019 / 11:00

Place: A-212

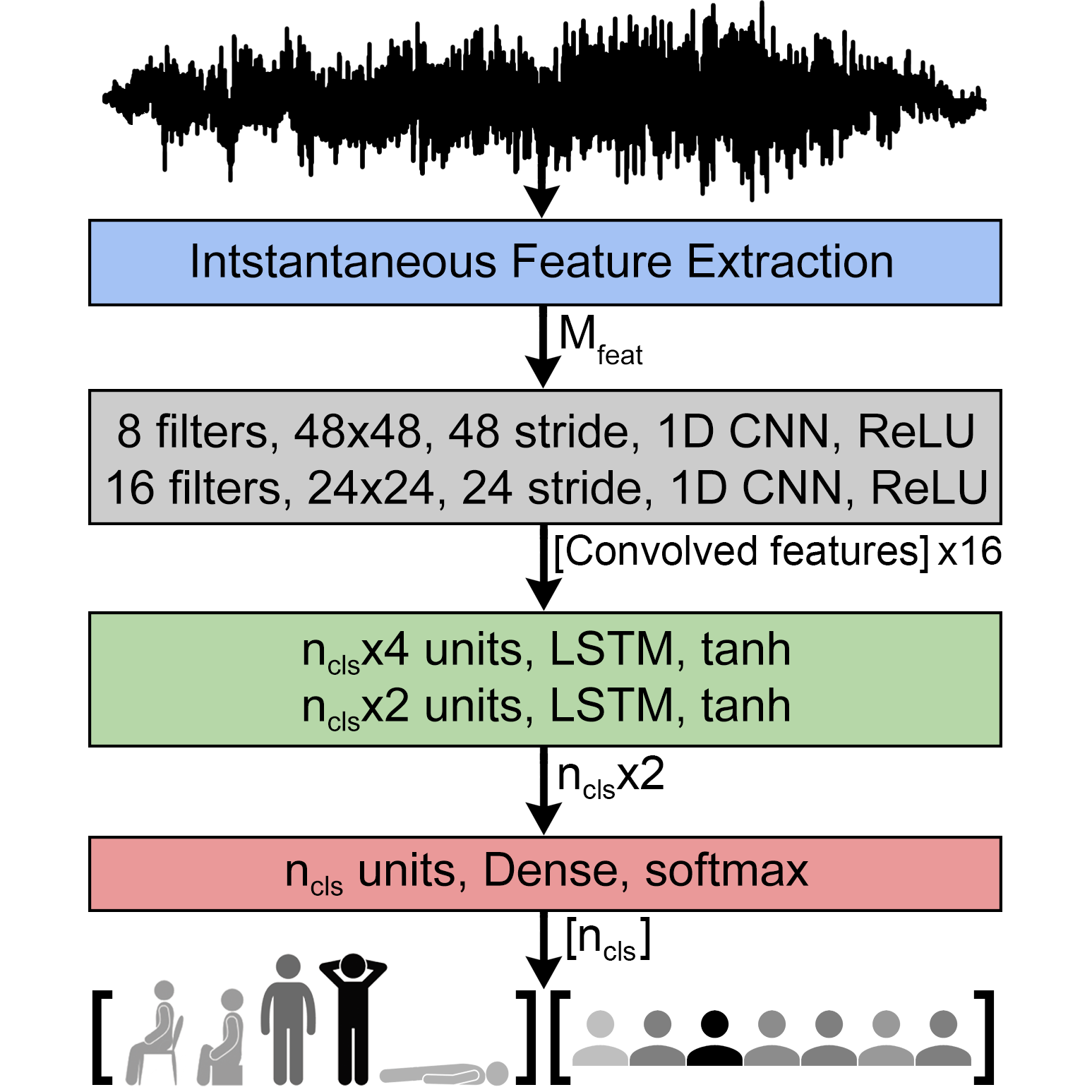

Abstract: Acoustic features extracted from speech are widely used for problems such as biometric speaker identification and first-person activity detection. However, the use of speech data raises privacy concerns because the speech content is explicitly available. In this thesis, we propose a method for speaker and posture classification using only breath data. Acoustic instantaneous side information was extracted from breath instances using the Hilbert-Huang transform: instantaneous frequency, magnitude, and phase features were computed from the intrinsic mode functions (IMFs), and different combinations of these features were fed into our CNN-LSTM network for classification. We also created BreathBase, a publicly available breath dataset, for both the experiments in this thesis and future work. The dataset contains more than 4600 breath instances detected in the recordings of 20 participants reading pre-prepared random pseudo-texts in 5 different postures, captured with 4 different microphones. Using side information acquired from the breath sections of speech, this method achieves 56% speaker classification accuracy and 96% posture classification accuracy among 15 speakers. Our network also outperformed various other methods, such as support vector machines (SVMs), long short-term memory (LSTM) networks, and a combination of k-nearest neighbors (k-NN) and dynamic time warping (DTW).
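The Hilbert spectral step of the Hilbert-Huang transform can be illustrated with a minimal sketch. It assumes the IMFs of a breath instance have already been obtained by empirical mode decomposition (the sifting step is not shown). For each IMF, it builds the analytic signal with the usual FFT construction, which is the same one `scipy.signal.hilbert` uses, and reads off instantaneous magnitude, unwrapped phase, and frequency. The function names `hilbert_features` and `stack_features`, the feature ordering, and the trimming of magnitude and phase to match the length of the frequency track are illustrative assumptions, not the thesis's actual pipeline.

```python
import numpy as np

def analytic_signal(x):
    """FFT-based analytic signal x + j*H{x} (same construction as scipy.signal.hilbert)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    spectrum = np.fft.fft(x)
    h = np.zeros(n)
    if n % 2 == 0:
        h[0] = h[n // 2] = 1.0   # keep DC and Nyquist bins unchanged
        h[1:n // 2] = 2.0        # double positive frequencies
    else:
        h[0] = 1.0
        h[1:(n + 1) // 2] = 2.0
    # negative-frequency bins stay zero
    return np.fft.ifft(spectrum * h)

def hilbert_features(imf, fs):
    """Instantaneous magnitude, phase (rad) and frequency (Hz) of one IMF."""
    z = analytic_signal(imf)
    magnitude = np.abs(z)
    phase = np.unwrap(np.angle(z))
    # Frequency is the phase derivative; finite differences give n - 1 samples.
    frequency = np.diff(phase) * fs / (2.0 * np.pi)
    return magnitude, phase, frequency

def stack_features(imfs, fs, use=("magnitude", "frequency", "phase")):
    """Hypothetical helper: stack the chosen features of every IMF into a
    (time, channels) matrix that a sequence model could consume."""
    columns = []
    for imf in imfs:
        magnitude, phase, frequency = hilbert_features(imf, fs)
        # Trim magnitude and phase so all tracks share the length n - 1.
        tracks = {"magnitude": magnitude[:-1], "phase": phase[:-1],
                  "frequency": frequency}
        columns.extend(tracks[name] for name in use)
    return np.stack(columns, axis=1)
```

As a quick check, a 50 Hz cosine sampled at 1000 Hz over exactly 50 periods gives a magnitude track close to 1 and a frequency track close to 50 Hz. Passing a different `use` tuple mimics feeding different feature combinations to the classifier.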