M.S. Candidate: Beyza Ecem Erce

Program: Data Informatics

Date: 26.05.2025 / 15:00

Place: A-212

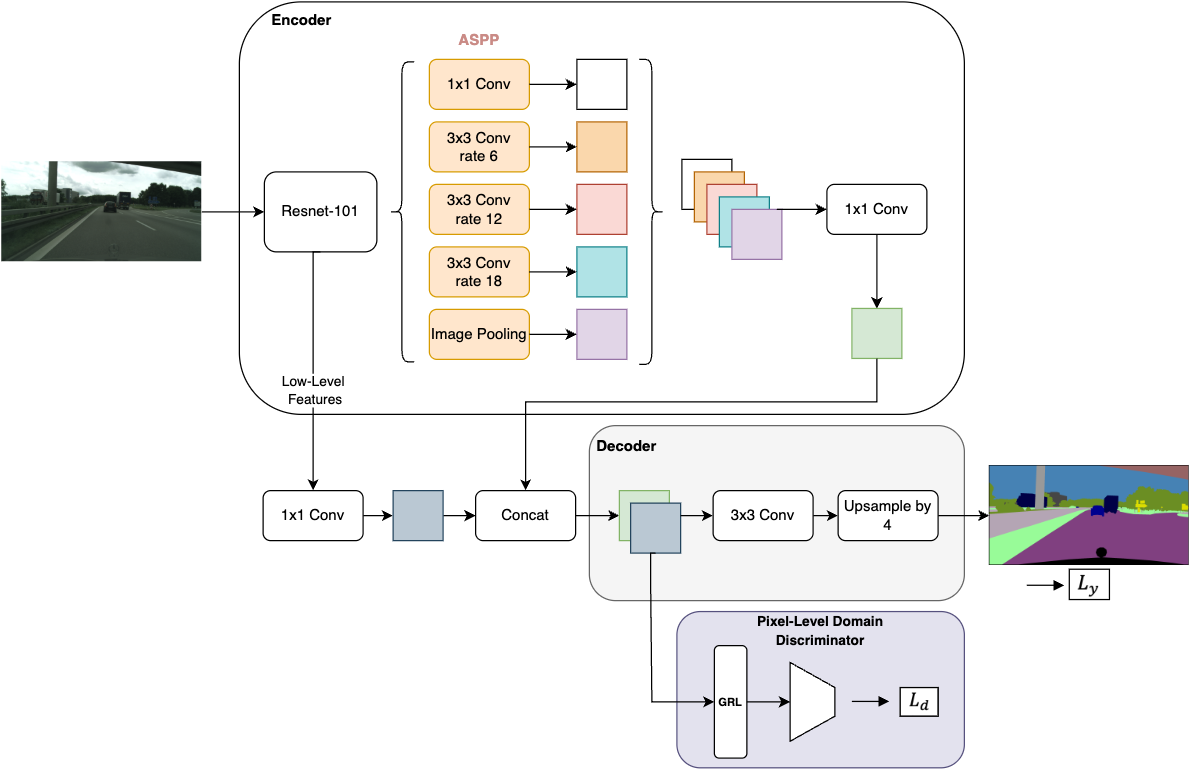

Abstract: Semantic segmentation involves assigning a class label to each pixel in an image according to the category of object or region it represents. Training a machine learning model for semantic segmentation using supervised learning requires a large dataset of images with pixel-level annotations. However, creating these detailed annotations is a significant challenge due to the time and effort required for precise labeling. This thesis addresses this issue by employing an adversarial domain adaptation technique to train a semantic segmentation model on synthetic images with corresponding pixel labels and adapt it to real-world images. The proposed model consists of a semantic segmentation module based on DeepLabV3+ and an adversarial domain adaptation module using a Domain-Adversarial Neural Network (DANN). The model supports two training settings: unsupervised domain adaptation (UDA), where no labeled real-world images are available, and semi-supervised domain adaptation (SSDA), where only a limited number of labeled real-world images are available. A series of experiments was conducted to evaluate various strategies for incorporating labeled real-world images into the training process, and the most effective method for SSDA is identified and proposed. Our results demonstrate that the proposed SSDA approach, using only a small set of labeled real-world images alongside a large dataset of labeled synthetic images, can match the performance of a DeepLabV3+ model trained with 12.5 times as many labeled real-world images using standard supervised learning, without domain adaptation. This represents a 92% reduction in the amount of annotated data required to achieve comparable performance.
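The 92% figure follows directly from the 12.5-fold ratio stated in the abstract: if the supervised baseline needs 12.5 times as many labeled real-world images, the SSDA approach uses 1/12.5 = 8% of them. A minimal sketch of that arithmetic is given below; the function name `annotation_reduction` is hypothetical and only illustrates the calculation, not any part of the thesis code.

```python
def annotation_reduction(ratio: float) -> float:
    """Fraction of labeled images saved when a baseline needs `ratio` times as many.

    `ratio` is the baseline label count divided by the label count of the
    more label-efficient method (12.5 in the abstract).
    """
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 1.0 - 1.0 / ratio


# Ratio reported in the abstract: the baseline uses 12.5x the labeled images.
reduction = annotation_reduction(12.5)
print(f"{reduction:.0%}")  # prints "92%"
```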