M.S. Candidate: Sefa Burak Okçu

Program: Cognitive Science

Date: 26.01.2023 / 09:30

Place: B-116

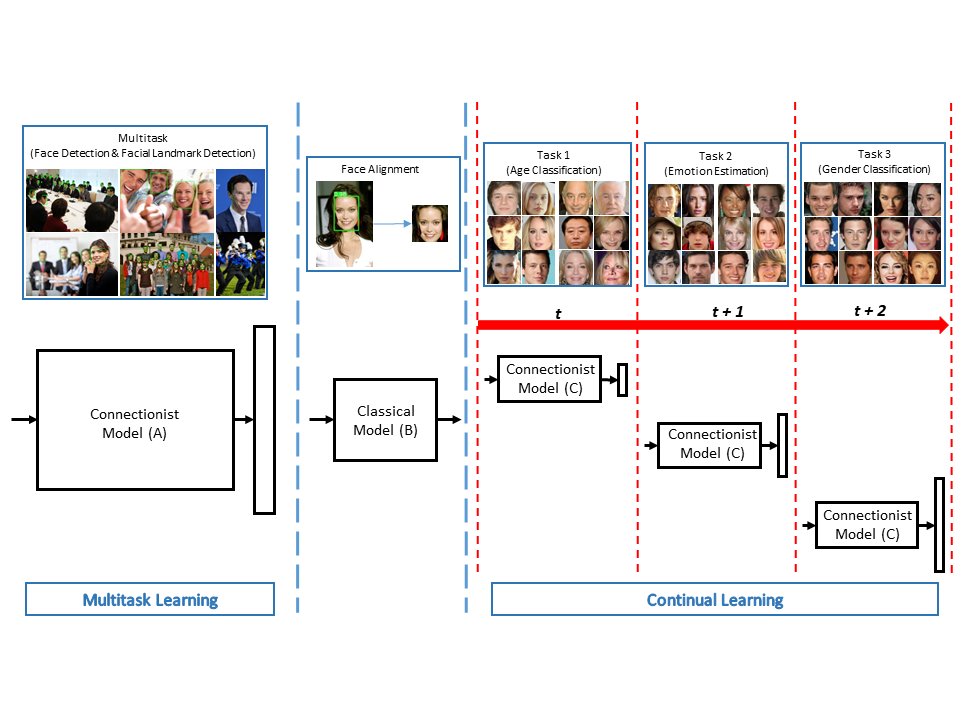

Abstract: Catastrophic forgetting is common in connectionist models that learn from a sequence of data drawn from different distributions. The human brain, by contrast, can learn continually from a sequence of experiences while retaining old information. Recent studies use brain-inspired methods such as regularization, parameter isolation, and replay to alleviate this problem in artificial systems. Following these studies, we investigated different continual learning methods on face analysis tasks: age estimation, binary gender recognition, emotion recognition, and face recognition. Neurological findings indicate that the brain has distinct, specialized functional and neural areas for the perception of faces. Similarly, we analyzed face analysis in two stages that are very common in artificial neural networks: face detection and face attribute analysis. First, we conducted multitask learning experiments on face detection and facial landmark detection. Second, we applied several biologically inspired continual learning methods to overcome catastrophic interference in artificial models. In the first set of experiments, our proposed model learned both face detection and facial landmark detection efficiently, along with a performance boost. In the second set, we observed that the continual learning methods performed better in task-incremental scenarios than in class-incremental scenarios. Nevertheless, combining two different continual learning methods yielded a remarkable performance improvement in class-incremental scenarios. We conclude that a combination of different neuroscience-inspired methods is required to mitigate forgetting and approach multitask performance.