Olca Orakcı, An LLM-Driven Framework for Automatic Curriculum Learning to Enhance Generalization in Open-Ended Reinforcement Learning

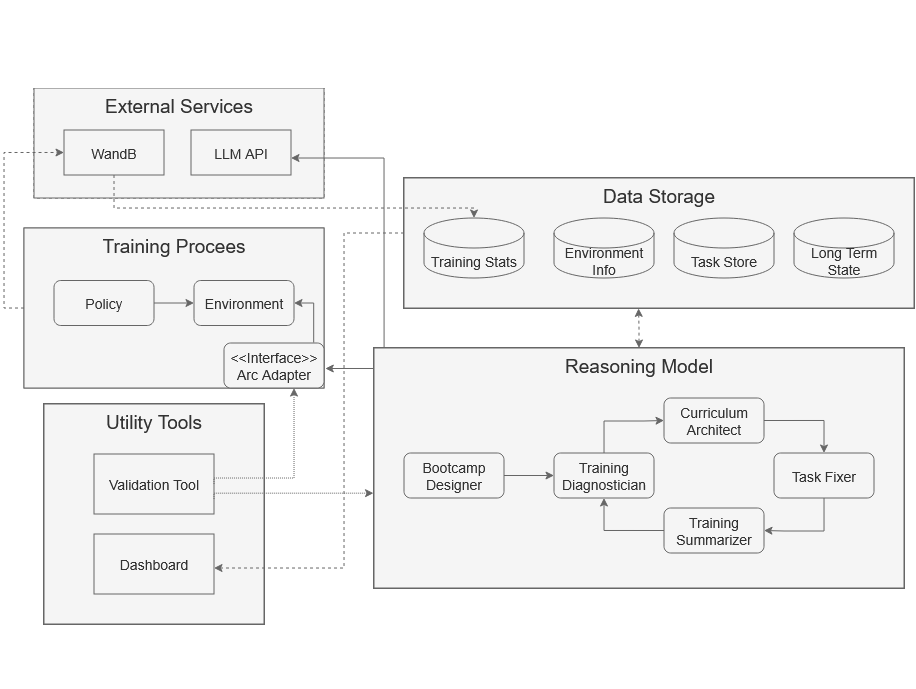

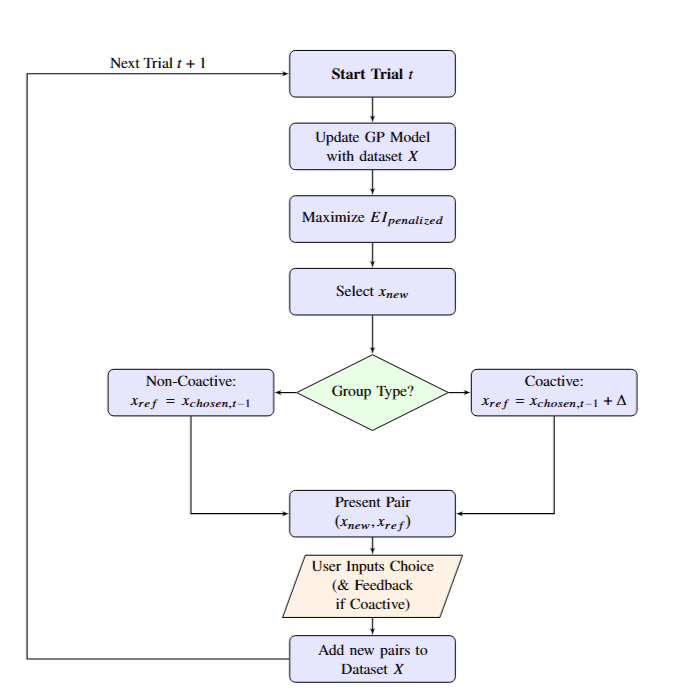

This thesis introduces the Adaptive Reasoning Curriculum (ARC), a standardized framework that uses Large Language Models (LLMs) for Automated Curriculum Learning (ACL). Designed to improve generalization in complex Open-Ended Learning (OEL) environments such as Neural MMO 2, ARC promotes efficient skill acquisition rather than memorization of the environment. Experiments demonstrate that ARC significantly outperforms expert-designed curricula and existing ACL methods in both average return and sample efficiency. By providing open-source adapters and auxiliary tools, ARC establishes a reproducible, accessible standard for integrating LLMs into multi-agent reinforcement learning (RL), fostering broader innovation in open-ended learning research.

Date: 14.01.2026 / 13:00 Place: A-212