PhD Candidate: Gürol Canbek

Program: Information Systems

Date: 02.09.2019, 13:00

Place: Conference Hall 01

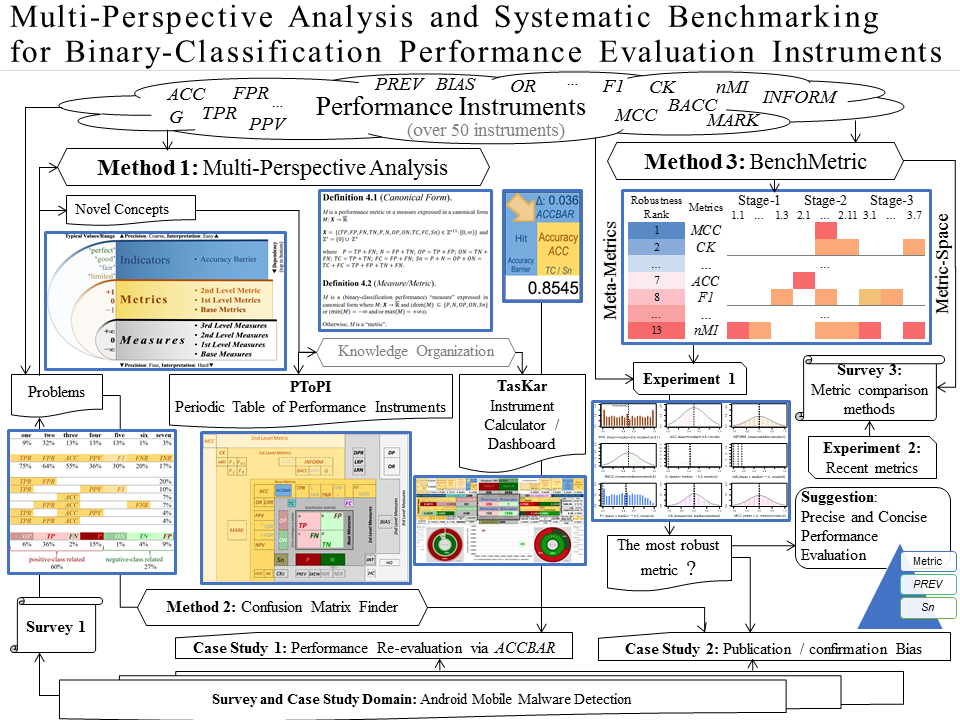

Abstract: This thesis proposes novel methods to analyze and benchmark binary-classification performance evaluation instruments. It addresses critical problems found in the literature, clarifies terminology, and, for the first time, distinguishes instruments as measures, metrics, and a new category, indicators. The multi-perspective analysis introduces novel, formally defined concepts such as canonical form, geometry, duality, complementation, dependency, and leveling, as well as two new basic instruments. An indicator named Accuracy Barrier is also proposed and tested by re-evaluating the performance of surveyed machine-learning classifications. An exploratory table is designed to represent all of these concepts for over 50 instruments, and its real use cases, such as domain-specific metrics reporting, are demonstrated. Furthermore, this thesis proposes a systematic three-stage benchmarking method that assesses the robustness of metrics using new concepts such as meta-metrics (metrics about metrics) and metric-space. Benchmarking 13 metrics reveals critical issues, especially in the conventional metrics accuracy, F1, and normalized mutual information, and identifies the Matthews Correlation Coefficient as the most robust metric. The benchmarking method is evaluated against the literature. Additionally, this thesis formally demonstrates the publication and confirmation biases that arise from reporting non-robust metrics. Finally, this thesis gives recommendations for precise and concise performance evaluation, comparison, and reporting. The developed software library, analysis/benchmarking platform, visualization and calculator/dashboard tools, and datasets have also been released online. This research is expected to re-establish and facilitate the classification performance evaluation domain and to contribute to responsible open research in performance evaluation by promoting the use of the most robust and objective instruments.
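To make the contrast between accuracy, F1, and the Matthews Correlation Coefficient concrete, the following is a minimal Python sketch using the standard textbook formulas. It is not the thesis's benchmarking method or its released library; the function names and the confusion-matrix counts (an imbalanced sample of 95 positives and 5 negatives) are hypothetical and chosen only for illustration.

```python
import math


def accuracy(tp: int, fp: int, fn: int, tn: int) -> float:
    """Fraction of all samples that were classified correctly."""
    return (tp + tn) / (tp + fp + fn + tn)


def f1_score(tp: int, fp: int, fn: int, tn: int) -> float:
    """Harmonic mean of precision and recall; note that it ignores TN."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def mcc(tp: int, fp: int, fn: int, tn: int) -> float:
    """Matthews Correlation Coefficient; uses all four confusion-matrix cells."""
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    # When any marginal sum is zero the coefficient is undefined;
    # returning 0.0 in that case is a common convention (assumed here).
    return (tp * tn - fp * fn) / denom if denom else 0.0


# Hypothetical imbalanced example: 95 positives, 5 negatives.
# The classifier finds most positives but only 1 of the 5 negatives.
counts = dict(tp=90, fp=4, fn=5, tn=1)

print(f"accuracy = {accuracy(**counts):.3f}")  # 0.910
print(f"F1       = {f1_score(**counts):.3f}")  # 0.952
print(f"MCC      = {mcc(**counts):.3f}")       # 0.135
```

In this illustrative case, accuracy and F1 both exceed 0.9 even though the classifier misses four of the five negatives, while MCC stays close to zero. This is one simple way to see why a metric that accounts for all four confusion-matrix cells can behave differently on imbalanced data.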