M.S. Candidate: Berat Tuna Karlı

Program: Information Systems

Date: 23.12.2022 / 10:00

Place: A108

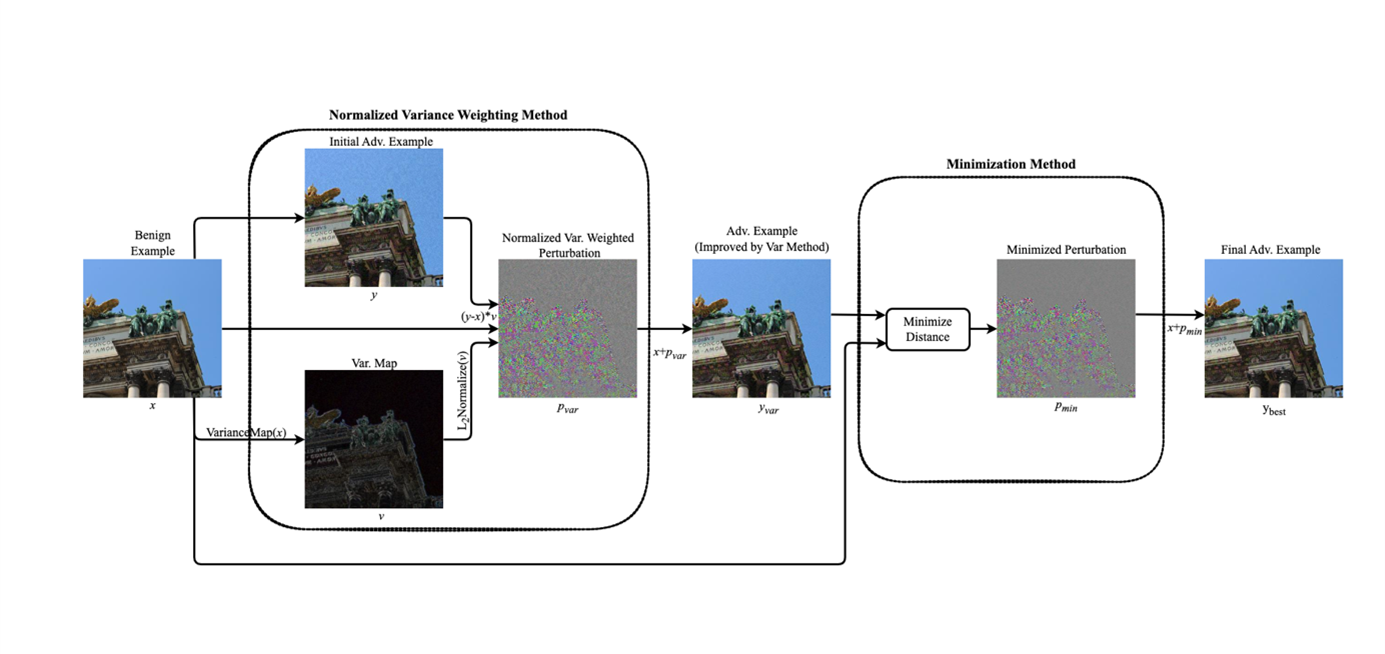

Abstract: Deep neural networks (DNNs) are used with great success in a variety of domains; however, they have been shown to be vulnerable to additive, non-arbitrary perturbations. In response, several attack and defense mechanisms have been developed; nevertheless, adding crafted perturbations degrades the perceptual quality of images. This study aims to improve the perceptual quality of adversarial examples independent of attack type, and proposes the integration of two attack-agnostic techniques for this purpose. The primary technique, Normalized Variance Weighting, improves the perceptual quality of adversarial attacks by applying a variance map that intensifies the perturbations in high-variance zones. This method can be applied to existing adversarial attacks without any additional overhead except a matrix multiplication. The secondary technique, the Minimization Method, minimizes the perceptual distance of a successful adversarial example to improve its perceptual quality. This technique can be applied to adversarial samples generated using any type of adversarial attack. Since the primary method is applied during the attack and the secondary method after the attack, the two methods can be used together in an integrated adversarial attack setting. It is shown that adversarial examples generated by integrating these methods exhibit the best perceptual quality as measured by the LPIPS metric.
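The idea behind the primary technique can be illustrated with a minimal NumPy sketch. It is not the thesis implementation: the function names, the 3x3 window, the reflect padding, the min-max normalization, and the element-wise product are all assumptions made for illustration, since the abstract states only that a variance map intensifies perturbations in high-variance zones.

```python
import numpy as np


def local_variance_map(image, window=3):
    """Per-pixel variance over a window x window neighbourhood.

    Assumes a 2-D grayscale image and an odd window size (illustrative choices).
    """
    pad = window // 2
    padded = np.pad(image.astype(np.float64), pad, mode="reflect")
    # Shape (H, W, window, window): one patch centred on every pixel.
    patches = np.lib.stride_tricks.sliding_window_view(padded, (window, window))
    return patches.var(axis=(-2, -1))


def normalize_map(variance, eps=1e-12):
    """Min-max rescale to [0, 1]; a constant map becomes all zeros."""
    lo, hi = variance.min(), variance.max()
    return (variance - lo) / (hi - lo + eps)


def weight_perturbation(perturbation, image, window=3):
    """Scale a perturbation element-wise by the normalized variance map.

    Flat regions, where changes are easy to see, receive little perturbation;
    textured regions keep most of it.
    """
    weights = normalize_map(local_variance_map(image, window))
    return perturbation * weights
```

In an iterative attack, a function like the hypothetical `weight_perturbation` would be called on each perturbation step before it is added to the image, which matches the abstract's description of the primary method being applied during the attack.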