M.S. Candidate: Ezgi Çavuş

Program: Information Systems

Date: 21.08.2025 / 14:00

Place: A-212

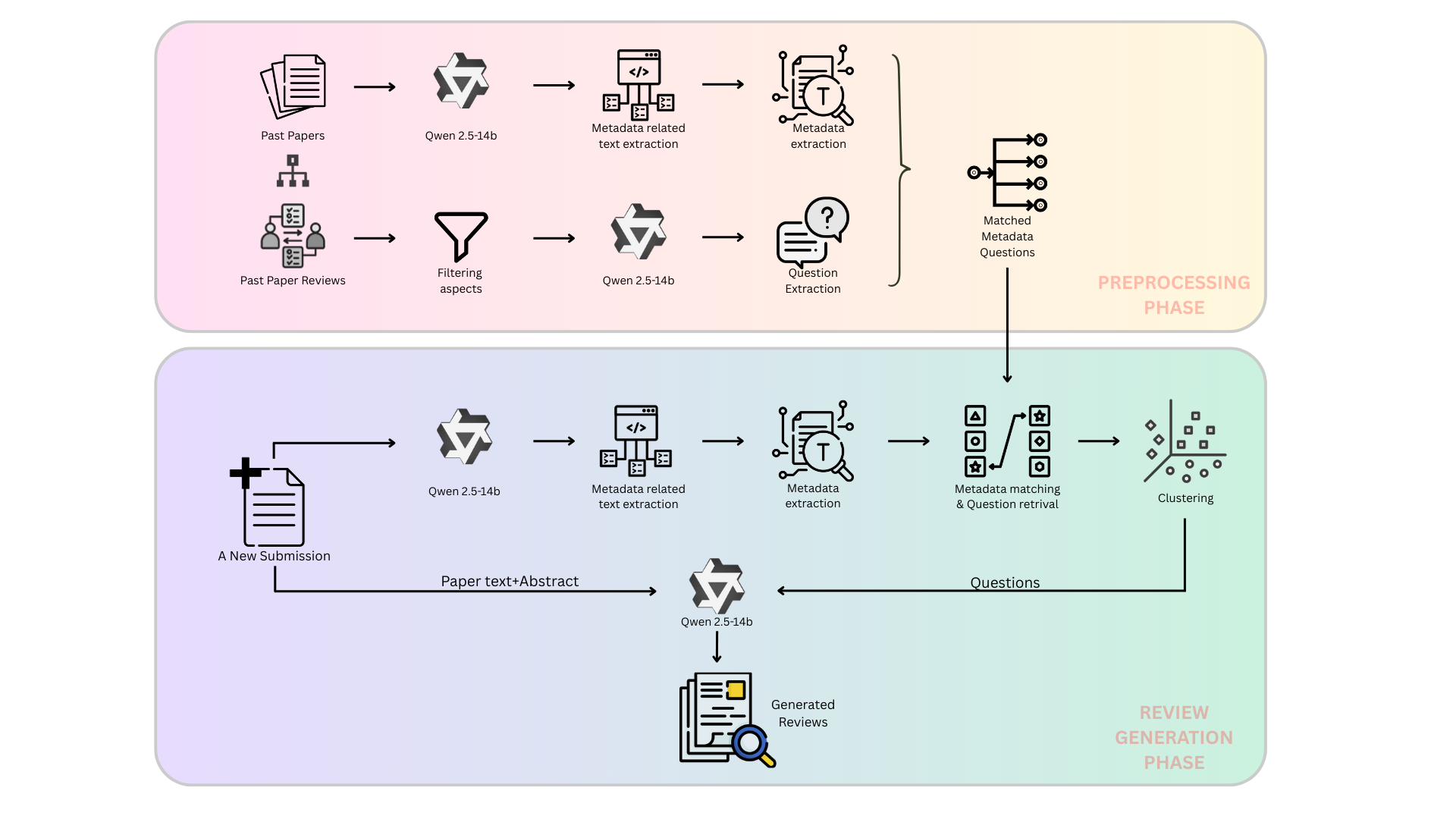

Abstract: Large Language Models (LLMs) are increasingly used to support academic evaluation, yet they often struggle to capture the domain-specific criteria essential for rigorous peer review. Existing reviewer guidelines and research checklists address general concerns such as reproducibility, ethical considerations, acknowledgment of limitations, and societal impact, but they tend to neglect discipline-specific criteria that vary with a paper’s methodology or evaluation strategy. This thesis introduces a framework that automatically extracts domain-specific review questions from past evaluations on the OpenReview platform and aligns them with new submissions using structured metadata derived from the paper content, including methodology, datasets, and evaluation metrics. Experimental results show that the framework improves the specificity and contextual relevance of generated reviews. Compared to baseline models, it allows more precise control over the content and focus of generated reviews by explicitly grounding them in metadata and past evaluation patterns, even when overall performance metrics are similar. The system also improves traceability, reduces hallucinated content, and increases the interpretability of automated peer review.
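The metadata-based alignment step can be illustrated with a minimal sketch. It assumes review questions have already been extracted from past OpenReview evaluations and tagged with the same metadata fields as a new submission (methodology, datasets, evaluation metrics). The names `ReviewQuestion`, `score`, and `select_questions`, the per-field Jaccard scoring, and the top-k cut-off are hypothetical choices for illustration, not the thesis's actual matching method.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# Hypothetical metadata fields, mirroring those named in the abstract.
FIELDS = ("methodology", "datasets", "metrics")


@dataclass
class ReviewQuestion:
    text: str
    # Tags describing the kind of paper the question was originally asked about.
    tags: dict[str, set[str]] = field(default_factory=dict)


def jaccard(a: set[str], b: set[str]) -> float:
    """Overlap between two tag sets; 0.0 when both are empty."""
    if not a and not b:
        return 0.0
    return len(a & b) / len(a | b)


def score(question: ReviewQuestion, paper: dict[str, set[str]]) -> float:
    """Average per-field Jaccard similarity between question tags and paper metadata."""
    total = sum(jaccard(question.tags.get(f, set()), paper.get(f, set())) for f in FIELDS)
    return total / len(FIELDS)


def select_questions(
    bank: list[ReviewQuestion], paper: dict[str, set[str]], k: int = 3, min_score: float = 0.0
) -> list[ReviewQuestion]:
    """Return up to k questions ranked by score, dropping those at or below min_score."""
    scored = sorted(((score(q, paper), q) for q in bank), key=lambda pair: pair[0], reverse=True)
    return [q for s, q in scored[:k] if s > min_score]


# Illustrative (made-up) question bank and submission metadata.
bank = [
    ReviewQuestion("Are results reported over multiple random seeds?",
                   {"methodology": {"deep learning"}, "metrics": {"accuracy"}}),
    ReviewQuestion("Is inter-annotator agreement reported for the new dataset?",
                   {"methodology": {"human annotation"}, "datasets": {"new dataset"}}),
    ReviewQuestion("Are BLEU scores complemented by human evaluation?",
                   {"methodology": {"text generation"}, "metrics": {"bleu"}}),
]
paper = {"methodology": {"text generation", "deep learning"},
         "datasets": {"wmt14"}, "metrics": {"bleu"}}

for q in select_questions(bank, paper):
    print(f"{score(q, paper):.2f}  {q.text}")
# prints:
# 0.50  Are BLEU scores complemented by human evaluation?
# 0.17  Are results reported over multiple random seeds?
```

Jaccard overlap is used here only because it is simple and transparent: each selected question can be traced back to the tags it shares with the submission, which echoes the traceability goal stated in the abstract. An embedding-based similarity would be a natural alternative under the same interface.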