M.S. Candidate: İbrahim Ethem Deveci

Program: Cognitive Science

Date: 23.01.2024 / 11:00

Place: B-116

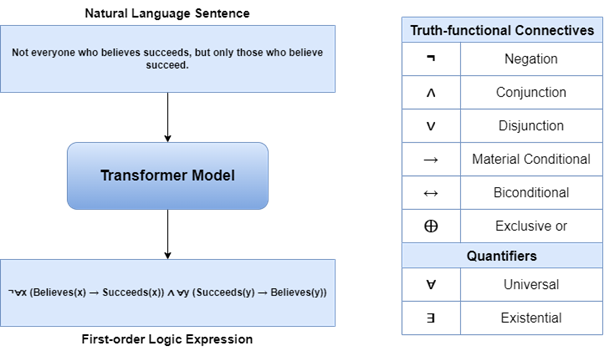

Abstract: Translating natural language sentences into logical expressions is a challenging task because of contextual information and the variability and complexity of sentences. The task is not straightforward for rule-based and statistical methods in artificial intelligence to handle. In recent years, a new deep learning architecture, the Transformer, has provided new ways to handle what was hard or seemed impossible in natural language processing tasks. The Transformer architecture and the language models based on it have revolutionized artificial intelligence research and changed how we approach natural language processing tasks. In this thesis, we conduct experiments to see whether Transformer models can successfully translate sentences into first-order logic expressions. To evaluate the Transformer models’ ability to generalize, we provide examples from argumentative texts written in the scientific domain.