Gonca Tokdemir Gökay, A Success Assessment Model and Methodology for Data Science Projects

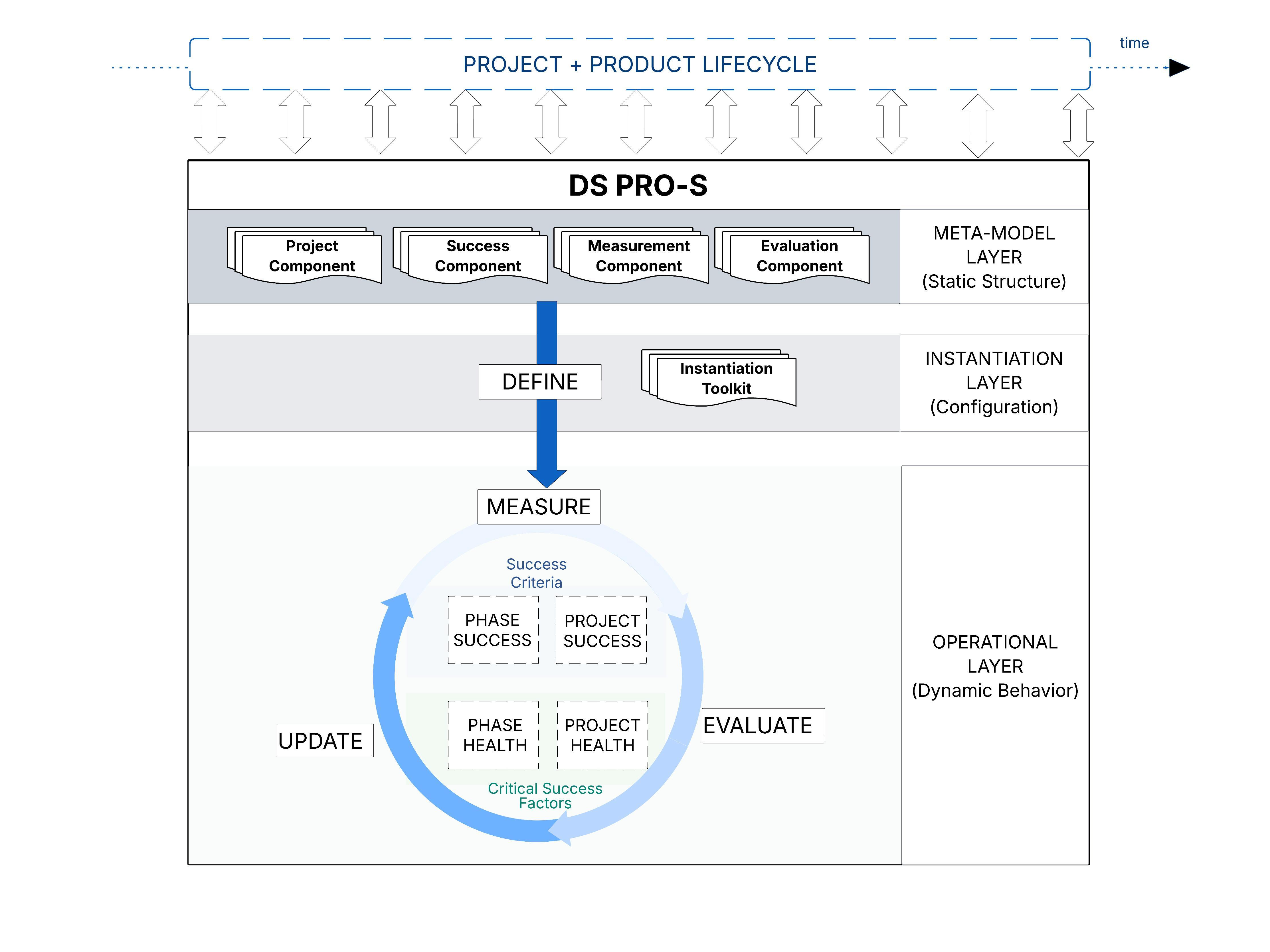

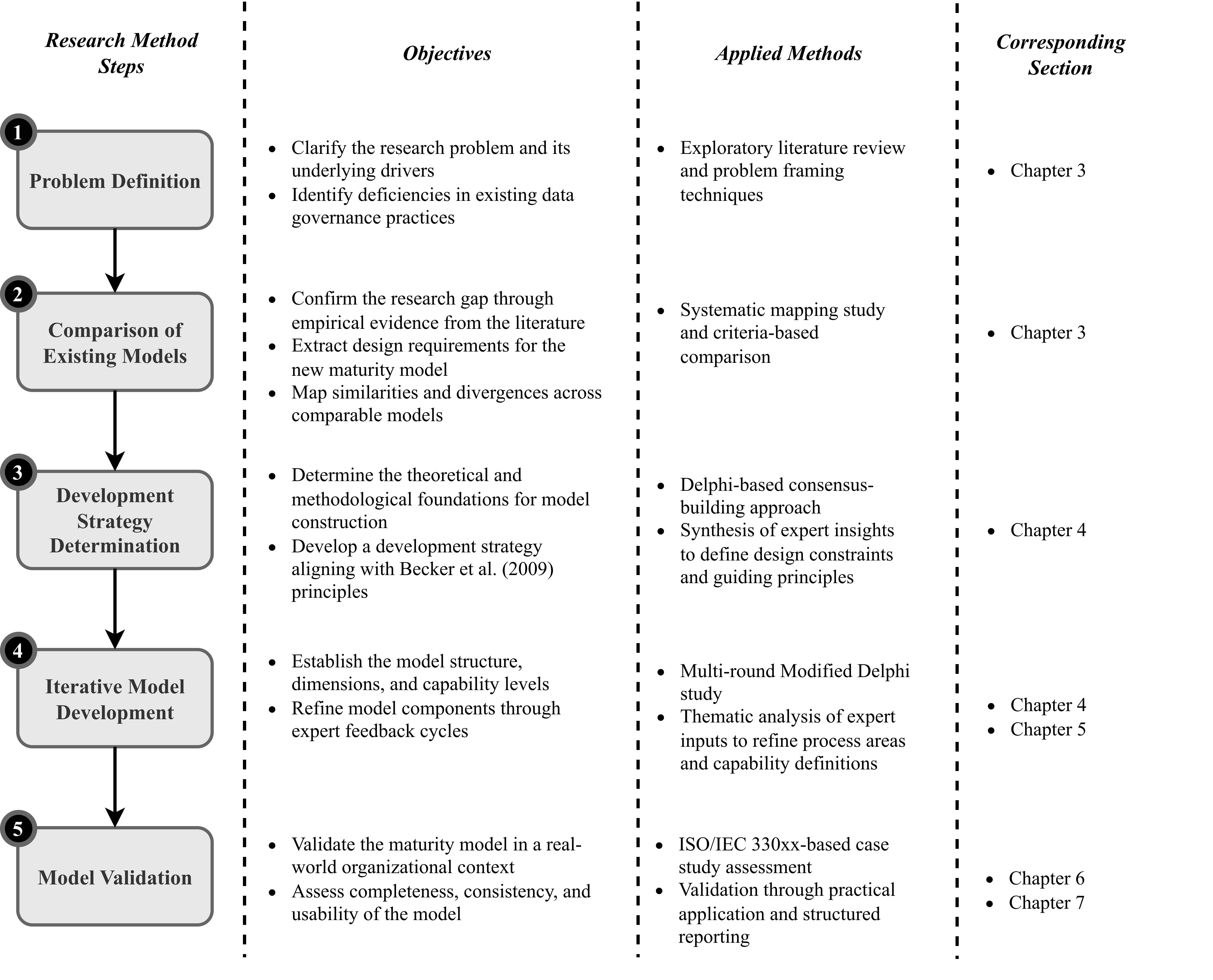

This research addresses a persistent paradox in the digital economy: while data is increasingly recognized as a strategic asset, the data science projects intended to realize its value continue to suffer from high failure rates. As management theory holds, measurement is a prerequisite for improvement; without the ability to assess success objectively, organizations cannot effectively detect risks or optimize their initiatives. However, the current literature lacks a formalized, operationalizable success assessment model that accounts for the distinctive characteristics of data science projects and is applicable across diverse project types and contexts. To bridge this gap, this thesis develops the Data Science Projects Success Assessment Model (DS PRO-S). Following a Design Science Research (DSR) approach, the study constructs a holistic solution that serves as a meta-model, an instantiation toolkit, and a methodology for making project success explicit, measurable, and comparable. This architecture is underpinned by a rigorous mathematical formalization of measurement and evaluation aligned with the ISO/IEC 15939 standard. By introducing evaluations at both the project and the phase level, and by decoupling success (the achievement of objectives) from health (the establishment of the conditions that enable success), DS PRO-S supports modular, asynchronous assessments that can be applied flexibly in practice. The applicability and usefulness of DS PRO-S were validated through expert interviews and multiple case studies.

Date: 21.01.2026, 13:30 | Place: A-212