Burak Büyükyaprak, Investigating The Semantic Similarity Effect On Delayed Free Recall Using Word Embeddings

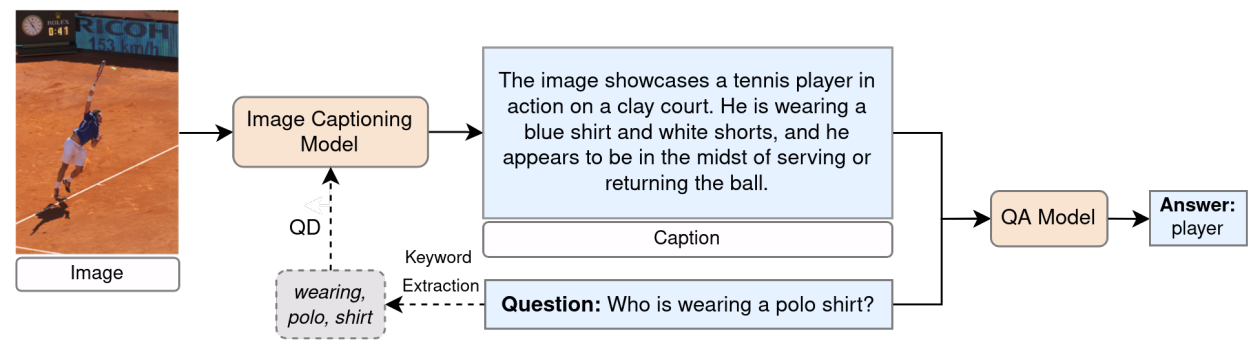

The thesis study "Investigating The Semantic Similarity Effect on Delayed Free Recall Using Word Embeddings" investigates the semantic proximity effect, alongside the temporal proximity effect, on delayed free recall. The study uses the fastText and word2vec word embedding models as methodological tools to outline the cognitive mechanisms underlying memory retrieval. By examining the interplay between word meanings and memory performance, it contributes to Cognitive Science and Psychology, specifically to research on language processing and human memory.

Date: 10.01.2025 / 13:00 Place: B-116