Caner Taş, Comparison of Machine Learning and Standard Credit Risk Models' Performances in Credit Risk Scoring of Buy Now Pay Later Customers

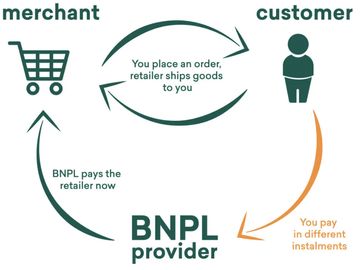

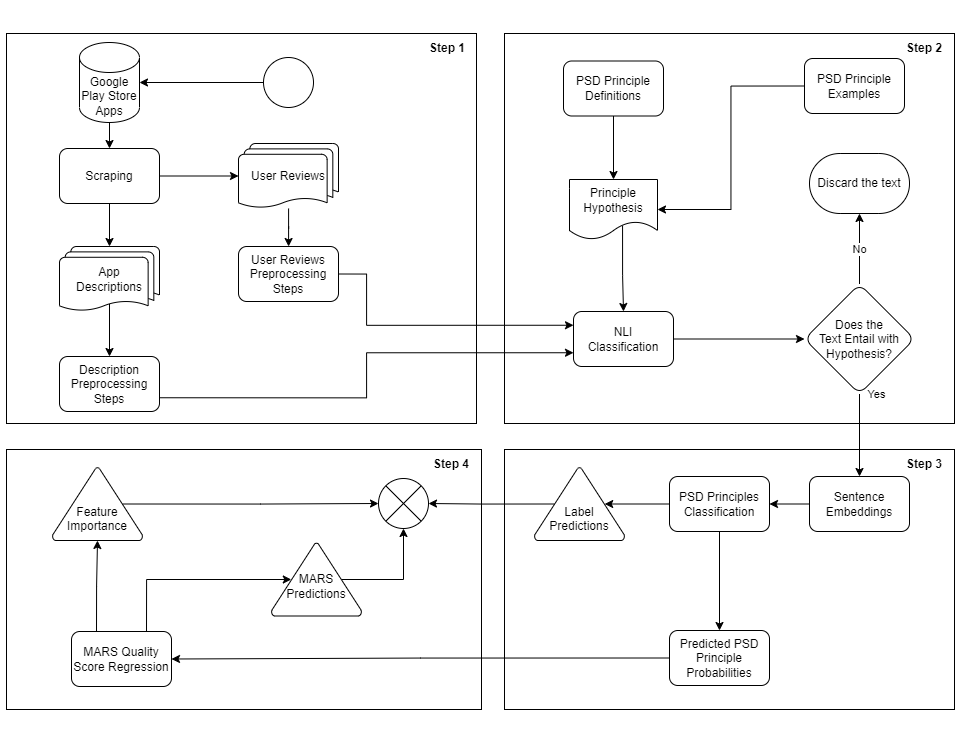

In this study, the performance of machine learning methods in credit risk scoring of "Buy Now Pay Later" customers is compared with that of standard credit risk models. Both traditional credit risk models and machine learning algorithms are evaluated on a real dataset. The models are compared in terms of variable selection, model training, and performance metrics. The results show the extent to which machine learning methods outperform traditional models in assessing the credit risk of "Buy Now Pay Later" customers. The study is expected to provide practical recommendations for improving the risk assessment processes of financial institutions and credit providers.

Date: 14.07.2023 / 13:30
Place: A-212